CCD VS CMOS

Which is better? It's complicated...

Much has been written about the relative advantages of CMOS versus CCD imagers. The debate seems to have continued for as long as most people can remember, with no definitive conclusion in sight. It is not surprising that a definitive answer is elusive, since the topic is not static. Technologies and markets evolve, affecting not only what is technically feasible, but also what is commercially viable. Imager applications are varied, with different and changing requirements. Some applications are best served by CMOS imagers, some by CCDs. In this article, we will attempt to add some clarity to the discussion by examining the different situations, explaining some of the lesser-known technical trade-offs, and introducing cost considerations into the picture.

In the Beginning

CCD (charge-coupled device) and CMOS (complementary metal-oxide-semiconductor) image sensors are two different technologies for capturing images digitally. Each has unique strengths and weaknesses, giving it advantages in different applications.

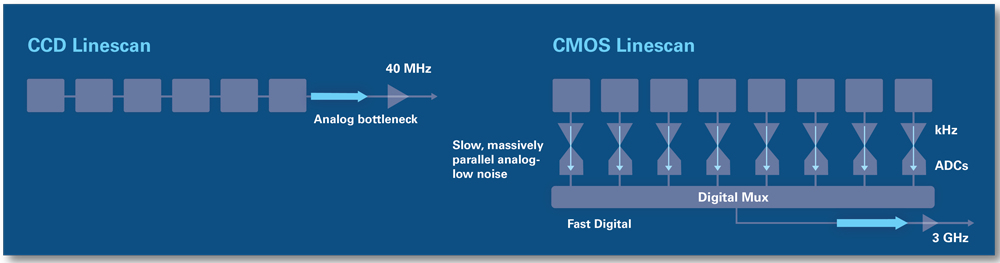

Both types of imagers convert light into electric charge and process it into electronic signals. In a CCD sensor, every pixel's charge is transferred through a very limited number of output nodes (often just one) to be converted to voltage, buffered, and sent off-chip as an analog signal. Because conversion happens outside the pixel, all of the pixel area can be devoted to light capture, and the output's uniformity (a key factor in image quality) is high. In a CMOS sensor, each pixel has its own charge-to-voltage conversion, and the sensor often also includes amplifiers, noise-correction, and digitization circuits, so that the chip outputs digital bits. These other functions increase the design complexity and reduce the area available for light capture. With each pixel doing its own conversion, uniformity is lower, but the readout is also massively parallel, allowing high total bandwidth for high speed.
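The uniformity and bandwidth trade-off described above can be illustrated with a toy model. The Python sketch below is only an illustration under stated assumptions: the function names, the 2% pixel-to-pixel gain spread, the 1000-electron flat field, and the unit conversion time are all hypothetical values chosen for the example, not figures from any real sensor. It models a CCD as one shared conversion gain and a CMOS imager as an independent gain per pixel, then compares the resulting non-uniformity and the number of conversion steps needed to read a frame.

```python
import random
import statistics


def ccd_gains(n_pixels, node_gain=1.0):
    # Assumption: a single output node, so every pixel sees the same gain.
    return [node_gain] * n_pixels


def cmos_gains(n_pixels, mean_gain=1.0, spread=0.02, seed=0):
    # Assumption: each pixel has its own converter whose gain varies
    # slightly (Gaussian, 2% standard deviation by default).
    rng = random.Random(seed)
    return [rng.gauss(mean_gain, spread) for _ in range(n_pixels)]


def simulate_readout(charges, gains):
    # Charge-to-voltage conversion: one gain applied per pixel.
    return [q * g for q, g in zip(charges, gains)]


def nonuniformity(voltages):
    # Relative standard deviation of the output under flat illumination.
    return statistics.pstdev(voltages) / statistics.fmean(voltages)


def readout_time(n_pixels, n_parallel_outputs, time_per_conversion=1.0):
    # Pixels are converted in batches of n_parallel_outputs (ceiling division).
    return -(-n_pixels // n_parallel_outputs) * time_per_conversion


# Flat-field illumination: every pixel collects the same charge.
flat_field = [1000.0] * 10_000
ccd = nonuniformity(simulate_readout(flat_field, ccd_gains(len(flat_field))))
cmos = nonuniformity(simulate_readout(flat_field, cmos_gains(len(flat_field))))
print(f"CCD non-uniformity:  {ccd:.4f}")
print(f"CMOS non-uniformity: {cmos:.4f}")
print("Serial readout steps:  ", readout_time(1_000_000, 1))
print("Parallel readout steps:", readout_time(1_000_000, 1000))
```

The model deliberately ignores noise, on-chip correction, and fill factor. It isolates only the two effects named in the text: a shared output node gives identical conversion for every pixel, while per-pixel conversion trades some uniformity for parallel readout.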

CCDs and CMOS imagers were both invented in the late 1960s and 1970s (DALSA founder Dr. Savvas Chamberlain was a pioneer in developing both technologies). CCDs became dominant, primarily because they gave far superior images with the fabrication technology available. CMOS image sensors required more uniformity and smaller features than silicon wafer foundries could deliver at the time. Not until the 1990s did lithography develop to the point that designers could begin making a case for CMOS imagers again. Renewed interest in CMOS was based on expectations of lowered power consumption, camera-on-a-chip integration, and lowered fabrication costs from the reuse of mainstream logic and memory device fabrication. Achieving these benefits in practice while simultaneously delivering high image quality has taken far more time, money, and process adaptation than original projections suggested, but CMOS imagers have joined CCDs as a mainstream, mature technology.